Human oversight of AI collapsed in steps as systems became "good enough." We got speed but lost resilience. Errors now accumulate silently and surface only after dependencies compound.

Adding more AI to the SOC accelerates this problem. AI makes confident decisions without understanding live system state.

The fix is architectural: a continuously updated model of your environment that knows what's true now. That's Stream's CloudTwin. It gives AI the grounding to make sound decisions.

Efficiency is a powerful drug.

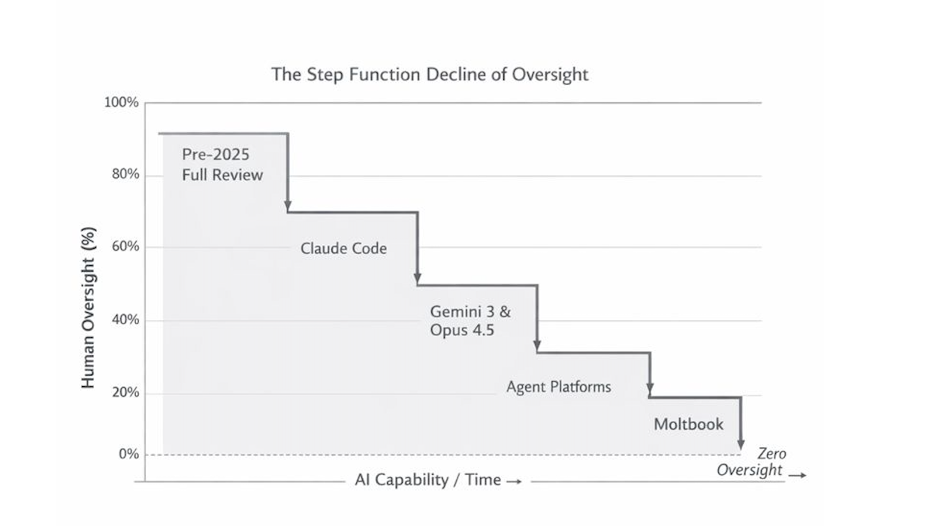

In the span of just two years, we’ve gone from fully supervised AI-generated work to near-zero oversight. Not because people became careless, but because the systems became good enough.

As AI capability rises, human supervision doesn’t fade gradually. It drops in sharp steps. Each drop coincides with a product shift that makes oversight feel redundant, slow, or irrational.

Rational? Yes.

Safe? No.

Early on, humans reviewed everything: every line and every decision. With the brittle models of the time, that oversight paid off.

Then agentic tools arrived, and supervision moved inside the product. These tools came with guardrails, retries, and self-correction that made reviewing outputs feel like checking a calculator.

Later, models crossed a quality threshold where manual review almost never caught mistakes, and oversight became a tax with no obvious return.

Then orchestration platforms abstracted the process. Humans stopped evaluating decisions and started evaluating outcomes.

Finally, autonomous agents and AI-to-AI systems emerged. At that point, oversight didn’t just decline. It became structurally impossible.

I wouldn’t call this laziness. It was more of a rational adaptation to systems that worked well enough, most of the time.

Each step forward traded human judgment for speed and automation. We got better outputs. What we didn't get was more resilient systems.

Here's what I've learned: highly optimized systems fail in predictable ways. They quietly absorb small errors, mask growing misalignments, and look perfectly stable, right up until the moment they break.

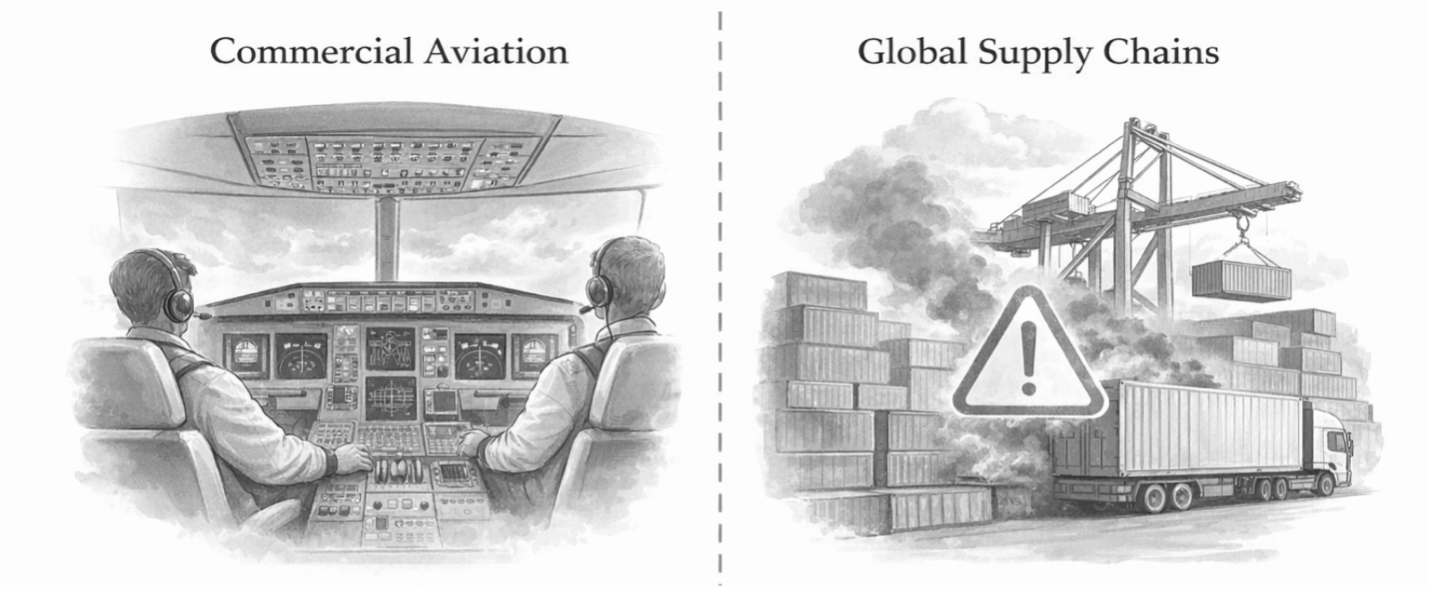

We've watched this play out beyond the technology industry. Commercial aviation increased cockpit automation and saw routine errors drop, but pilot situational awareness eroded along with it. Global supply chains embraced just-in-time optimization, stripped out redundancy, and transformed minor hiccups into cascading global failures.

Each local optimization made sense. What disappeared was system-level understanding.

AI systems are now following the same path.

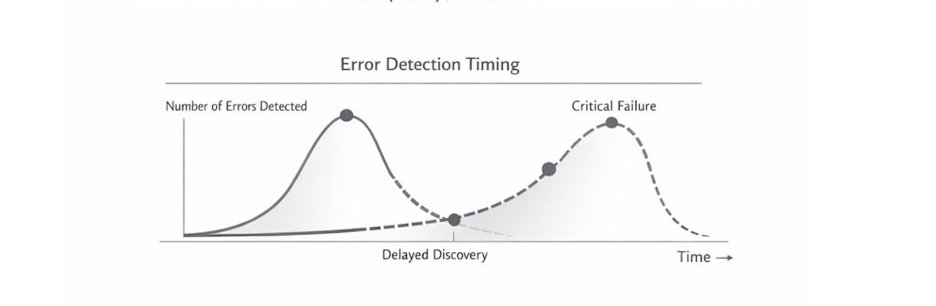

One of the most dangerous assumptions in modern AI systems is that fewer visible errors mean fewer errors overall.

What actually happens is that errors surface later.

When oversight is high, mistakes are caught early, when impact is limited. As oversight drops, issues accumulate quietly and reveal themselves only after dependencies have compounded.

By the time the failure is visible, it’s no longer local. It’s systemic.

The systems still work, and that’s what makes this so dangerous.

Faced with growing complexity, the industry’s instinct is predictable: add more AI.

AI SOC platforms promise faster triage and clearer explanations. But most of them reason over events, alerts, and logs. They don’t understand the live state of the environment they’re defending.

This creates an illusion of control.

AI-led decisions feel confident and come with coherent explanations. But they're grounded in an incomplete model of reality. When AI optimizes response without understanding dependencies or blast radius, it accelerates failure.

Fast mistakes propagate faster.

The problem runs deeper than intelligence. We're now dealing with abstraction without grounding.

Human oversight disappeared because humans can't reason about dynamic systems at machine speed. And here's the thing: stacking another layer of inference on top of stale data doesn't fix this. It makes it worse.

The only durable fix is architectural.

You need a continuously updated, stateful model of the system itself. One that knows what is true now, not what was true hours ago.

Stream was built on a simple premise: if humans can no longer provide oversight, the system must understand itself.

Our patent-pending CloudTwin is a live, stateful model of the cloud that includes SaaS, runtime, perimeter, identities, permissions, configurations, network paths, and behavior. It continuously reconciles all of this into a single, unified representation of reality.

AI operates on top of this state, not instead of it.

That’s the difference between automation that guesses and automation that understands.

We’re not putting humans back in the loop for every decision. That era is over.

The choice is simple.

Either we run autonomous systems blindly and hope edge cases don’t align, or we build systems that understand their own state.

Efficiency got us here.

Architecture is how we survive it.

Stream.Security delivers the only cloud detection and response solution that SecOps teams can trust. Born in the cloud, Stream's CloudTwin solution enables real-time cloud threat and exposure modeling to accelerate response in today's highly dynamic cloud enterprise environments. By using the Stream Security platform, SecOps teams gain unparalleled visibility and can pinpoint exposures and threats by understanding the past, present, and future of their cloud infrastructure. The AI-assisted platform helps determine attack paths and blast radius across all elements of the cloud infrastructure to eliminate gaps and accelerate MTTR, streamlining investigations and reducing knowledge gaps while maximizing team productivity and limiting burnout.